Gaming & Metaverse Platform Image Moderation

Comprehensive AI-powered image moderation solutions designed to protect young users, maintain community standards, and ensure brand safety across gaming platforms, virtual worlds, and emerging metaverse environments.

The Unique Challenge of Gaming Platform Moderation

Gaming and metaverse platforms present unprecedented moderation challenges that combine the complexity of traditional social media with the immersive, creative nature of virtual worlds. These platforms often serve diverse age groups, from children as young as 6 to adults, creating complex safety requirements where content appropriate for one demographic can be harmful to another. The interactive nature of gaming environments means that user-generated content appears not just in profiles but in virtual worlds, custom creations, and real-time interactions.

Major gaming platforms such as Roblox, Minecraft, Fortnite Creative, and VRChat, along with emerging metaverse platforms, process millions of user-generated images daily, including avatars, custom skins, virtual world decorations, and images shared during social interactions. Unlike static social media posts, gaming content is often created and shared in real time in immersive 3D environments, where inappropriate content can have an immediate impact on other users' experiences.

The stakes are particularly high for gaming platforms because of their popularity with minors and the potential for virtual environments to normalize inappropriate behaviors or expose children to harmful content. Regulatory scrutiny has intensified, with governments worldwide examining gaming platforms' child safety measures and content moderation practices. Failed moderation can result in age rating changes, platform bans, regulatory fines, and severe reputation damage.

Scale and Complexity

Leading gaming platforms moderate over 2 billion user-generated images monthly, including real-time avatar modifications, custom textures, virtual world decorations, and social sharing content across diverse age groups and global communities.

Avatar and Character Content Moderation

Avatar customization and character creation represent core features of modern gaming and metaverse platforms, allowing users to express creativity and identity through virtual representations. However, these systems can be exploited to create inappropriate representations, offensive imagery, or content that violates platform policies. Our specialized avatar moderation system analyzes both pre-created assets and user-generated combinations to ensure appropriate content across all user interactions.

The system evaluates avatar clothing, accessories, body modifications, and visual elements for appropriateness across different age groups. Advanced analysis can detect attempts to create nude or suggestive avatars through creative use of skin-colored clothing, transparent textures, or layered items that combine to create inappropriate effects. This prevents users from circumventing content policies through creative exploitation of avatar systems.

Beyond inappropriate sexual content, the system identifies offensive symbols, hate imagery, trademarked content used without permission, and references to violence, drugs, or other content inappropriate for younger users. The analysis considers both individual items and their combinations, as users may attempt to create offensive content by combining seemingly innocent elements.
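The idea of checking combinations as well as individual items can be illustrated with a minimal sketch. It assumes each avatar item already carries descriptive tags from an upstream classifier. The tag names, the `AvatarItem` type, and the `BLOCKED_TAG_COMBINATIONS` rules are hypothetical and are not part of any documented API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AvatarItem:
    name: str
    tags: frozenset  # descriptive labels assigned upstream (hypothetical)


# Hypothetical rules: each set of tags is only a problem when every tag in it
# is present somewhere on the same avatar.
BLOCKED_TAG_COMBINATIONS = [
    frozenset({"skin_tone_texture", "full_body_coverage"}),
    frozenset({"transparent_texture", "no_base_layer"}),
]


def flagged_combinations(items):
    """Return the blocked tag sets that the equipped items trigger together."""
    combined = frozenset().union(*(item.tags for item in items))
    return [rule for rule in BLOCKED_TAG_COMBINATIONS if rule <= combined]


bodysuit = AvatarItem("beige bodysuit", frozenset({"skin_tone_texture"}))
wrap = AvatarItem("full body wrap", frozenset({"full_body_coverage"}))

print(flagged_combinations([bodysuit]))        # one item alone triggers no rule
print(flagged_combinations([bodysuit, wrap]))  # together they trigger the first rule
```

Each item passes on its own, and the rule fires only once the tags from both items are pooled, which is the case described above.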

Real-Time Avatar Analysis

Our avatar moderation processes over 50 million avatar combinations daily in real time, catching inappropriate content before other users can see it and maintaining safe virtual environments for all age groups.

The system also analyzes user-created textures, skins, and custom assets that may be uploaded to platforms supporting user-generated content. Advanced image analysis can detect hidden inappropriate imagery, subtle offensive references, or attempts to embed harmful content in seemingly innocent designs that could be used across multiple avatar elements.

- Real-time avatar combination analysis

- Inappropriate clothing and accessory detection

- Offensive symbol and hate imagery identification

- Trademark and copyright violation prevention

- User-generated texture and skin analysis

- Hidden content and steganography detection

- Age-appropriate content filtering

- Cultural sensitivity and regional compliance

Virtual World and Environment Safety

Virtual worlds and user-generated environments in gaming and metaverse platforms allow unprecedented creative expression but also create new vectors for inappropriate content and safety risks. Users can create virtual spaces, upload decorations, build structures, and design experiences that other players will encounter. Our comprehensive environment moderation system analyzes these virtual creations to ensure they maintain appropriate community standards while preserving creative freedom.

The system evaluates user-created textures, images, and decorative elements used in virtual environments for inappropriate content including explicit imagery, offensive symbols, or attempts to recreate real-world locations associated with violence or extremism. Advanced spatial analysis can identify when users attempt to create inappropriate shapes or arrangements using seemingly innocent building blocks or decorative elements.

Virtual world analysis extends beyond static content to evaluate player-created games, experiences, and interactive elements that may expose other users to inappropriate content or situations. This includes detecting virtual recreation of real-world violence, simulated inappropriate activities, or environments designed to bypass platform safety measures through creative interpretation of game mechanics.

The system also monitors virtual gathering spaces, social areas, and communication zones where users may share screens, display images, or create content intended for social interaction. These spaces require particular attention as they often serve as venues for both legitimate social interaction and potential harassment, inappropriate content sharing, or predatory behavior targeting younger users.

- User-generated environment content scanning

- Virtual structure and creation appropriateness analysis

- Texture and decoration content verification

- Spatial arrangement and pattern recognition

- Interactive experience content evaluation

- Social space and gathering area monitoring

- Screen sharing and display content filtering

- Real-world recreation detection and prevention

Child Safety and Age-Appropriate Content

Gaming and metaverse platforms have become primary social spaces for children and teenagers, making robust child safety measures not just beneficial but essential for legal compliance and ethical operation. Our specialized child safety system goes beyond traditional content filtering to understand the unique ways that inappropriate content can appear in gaming contexts and virtual environments where creative expression meets social interaction.

The system implements age-based content filtering that applies different appropriateness standards to different age groups while keeping experiences engaging. Content appropriate for teenagers may be filtered from younger users' experiences, while those younger users still receive age-appropriate challenges and social interactions that support healthy development and learning through gaming.
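As a rough illustration of age-stratified filtering, the sketch below assigns each content item a minimum age band and shows a viewer only items at or below their own band. The band boundaries (13 and 18), the `AgeBand` enum, and the sample catalog are assumptions made for the example, not the product's actual policy tiers.

```python
from enum import IntEnum


class AgeBand(IntEnum):
    UNDER_13 = 0
    TEEN = 1
    ADULT = 2


def band_for_age(age):
    """Map a viewer's age to a band (boundaries are illustrative assumptions)."""
    if age < 13:
        return AgeBand.UNDER_13
    if age < 18:
        return AgeBand.TEEN
    return AgeBand.ADULT


def visible_items(catalog, viewer_age):
    """Keep only items whose minimum band does not exceed the viewer's band."""
    viewer_band = band_for_age(viewer_age)
    return [name for name, minimum_band in catalog if minimum_band <= viewer_band]


catalog = [
    ("cartoon pet skin", AgeBand.UNDER_13),
    ("fantasy battle armor", AgeBand.TEEN),
    ("horror-themed room decor", AgeBand.ADULT),
]

print(visible_items(catalog, 8))
print(visible_items(catalog, 15))
```

Using an ordered enum keeps the comparison to a single `<=`, so adding a new band only requires inserting it at the right position.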

Regulatory Compliance

Our child safety solutions help gaming platforms comply with COPPA, the UK Age Appropriate Design Code, the child safety provisions of the EU Digital Services Act, and emerging regulations across 40+ countries with specialized gaming safety requirements.

Advanced detection identifies content that may be specifically designed to appeal to or groom minors, including predatory behavior patterns, requests for personal information, attempts to move conversations to external platforms, and content designed to normalize inappropriate relationships or behaviors. The system can detect these patterns even when they appear through gaming-specific communication methods like virtual gestures, avatar positioning, or environmental messaging.
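Two of the signals named above, requests for personal information and attempts to move a conversation to an external platform, can be sketched with simple pattern matching on text extracted from chat or from images. This is a deliberately simplified illustration: the phrases in `SIGNAL_PATTERNS` are hypothetical, and keyword matching alone is not how a production system would detect grooming behavior.

```python
import re

# Hypothetical phrase lists for illustration only.
SIGNAL_PATTERNS = {
    "personal_info_request": re.compile(
        r"\b(how old are you|where do you live|what school|your (address|phone number))\b",
        re.IGNORECASE,
    ),
    "external_platform": re.compile(
        r"\b(add me on|message me on|dm me on|talk on)\s+\w+",
        re.IGNORECASE,
    ),
}


def detect_signals(message):
    """Return the names of all signal patterns found in the message."""
    return {name for name, pattern in SIGNAL_PATTERNS.items() if pattern.search(message)}


print(detect_signals("nice build!"))
print(detect_signals("How old are you? Add me on SomeApp"))
```

In practice such signals would feed a broader behavioral model rather than trigger enforcement directly, since individual phrases are common in harmless conversations.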

The system also monitors for content that may be traumatic or developmentally inappropriate for children, including realistic violence beyond game-appropriate fantasy violence, disturbing imagery, references to self-harm, and content that could contribute to unhealthy body image or social comparison, issues that are particularly relevant in avatar-based social environments.

- Age-stratified content appropriateness filtering

- Predatory behavior pattern recognition

- Personal information request detection

- External platform contact attempt identification

- Grooming behavior and language analysis

- Developmentally inappropriate content screening

- Trauma and disturbing imagery prevention

- Body image and social comparison content management

Brand Safety and Intellectual Property Protection

Gaming and metaverse platforms often rely on brand partnerships, licensed content, and advertising revenue while simultaneously allowing user-generated content that could infringe on intellectual property or create brand safety concerns. Our comprehensive brand protection system ensures that user-generated content doesn't violate intellectual property rights while maintaining the creative freedom that makes gaming platforms engaging and commercially viable.

The system identifies unauthorized use of copyrighted characters, logos, brand imagery, and protected intellectual property in user-generated content including avatars, virtual world decorations, and custom creations. Advanced analysis can detect attempts to recreate protected content through user creativity, including building block recreations of copyrighted characters or subtle references that could still constitute trademark infringement.

Brand safety analysis ensures that user-generated content doesn't create inappropriate associations for advertising partners or licensed content providers. The system identifies content that could damage brand relationships, violate advertising standards, or create liability for platform operators while allowing legitimate user expression and creativity within appropriate boundaries.

Music and audio content integration requires special attention as users may attempt to incorporate copyrighted music, sound effects, or audio content through image-based instructions, lyric displays, or references to protected audio content. The system identifies these attempts and helps platforms maintain compliance with music licensing agreements and copyright law.

- Copyrighted character and imagery detection

- Trademark and logo usage monitoring

- Brand partnership content compliance

- Advertising safety and appropriateness verification

- Licensed content usage tracking

- Music and audio reference identification

- Intellectual property violation reporting

- Brand relationship protection and compliance

Real-Time Moderation and Community Management

Gaming and metaverse platforms require real-time moderation capabilities that can respond to inappropriate content and behavior instantly, before it can impact other users' experiences or safety. Unlike traditional social media where content can be reviewed after posting, gaming environments require immediate responses to maintain immersive, safe experiences for all participants, especially in multiplayer and social gaming contexts.

Our real-time moderation system processes content as it's created, providing instant feedback to users and automatic enforcement actions when necessary. The system can instantly hide inappropriate content, temporarily restrict user actions, or escalate serious violations to human moderators while maintaining seamless gaming experiences for compliant users.
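The three responses described here (hide, restrict, escalate) can be sketched as a small decision function. The score thresholds, the `prior_violations` input, and the `Action` names are assumptions chosen for the example. Real thresholds would be tuned per platform and policy.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    HIDE = "hide"
    RESTRICT = "restrict"
    ESCALATE = "escalate"


# Illustrative thresholds, not tuned values.
HIDE_THRESHOLD = 0.5
ESCALATE_THRESHOLD = 0.9
REPEAT_OFFENDER_LIMIT = 2


def decide(score, prior_violations):
    """Map a violation score in [0, 1] and a user's history to enforcement actions."""
    if score < HIDE_THRESHOLD:
        return [Action.ALLOW]
    actions = [Action.HIDE]
    if prior_violations >= REPEAT_OFFENDER_LIMIT:
        actions.append(Action.RESTRICT)   # temporary restriction for repeat offenders
    if score >= ESCALATE_THRESHOLD:
        actions.append(Action.ESCALATE)   # serious violations go to human moderators
    return actions


print(decide(0.2, 0))
print(decide(0.95, 3))
```

Returning a list rather than a single action lets the caller hide content immediately while a human review proceeds in parallel.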

Advanced behavioral analysis identifies coordinated harassment, griefing campaigns, or other community disruption patterns that may involve multiple users working together to circumvent individual content restrictions. This systemic approach protects communities from organized harassment while maintaining fair treatment for individual users who may make occasional mistakes.
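One simple way to distinguish coordinated harassment from an individual's occasional mistake is to count distinct actors targeting the same user within a sliding time window. The sketch below assumes events arrive in timestamp order. The `CoordinationDetector` class, the five-minute window, and the three-actor threshold are hypothetical choices for illustration.

```python
from collections import defaultdict, deque


class CoordinationDetector:
    def __init__(self, window_seconds=300, min_distinct_actors=3):
        self.window_seconds = window_seconds
        self.min_distinct_actors = min_distinct_actors
        self._events = defaultdict(deque)  # target -> deque of (timestamp, actor)

    def record(self, timestamp, actor, target):
        """Record a flagged action; return True if the target looks swarmed."""
        events = self._events[target]
        events.append((timestamp, actor))
        # Drop events that have fallen out of the sliding window.
        while events and events[0][0] < timestamp - self.window_seconds:
            events.popleft()
        distinct_actors = {a for _, a in events}
        return len(distinct_actors) >= self.min_distinct_actors


detector = CoordinationDetector()
print(detector.record(0, "user_a", "victim"))
print(detector.record(10, "user_b", "victim"))
print(detector.record(20, "user_c", "victim"))
```

Because the check counts distinct actors rather than total events, one user repeating the same action does not trip the coordination signal and can be handled by ordinary per-user enforcement.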

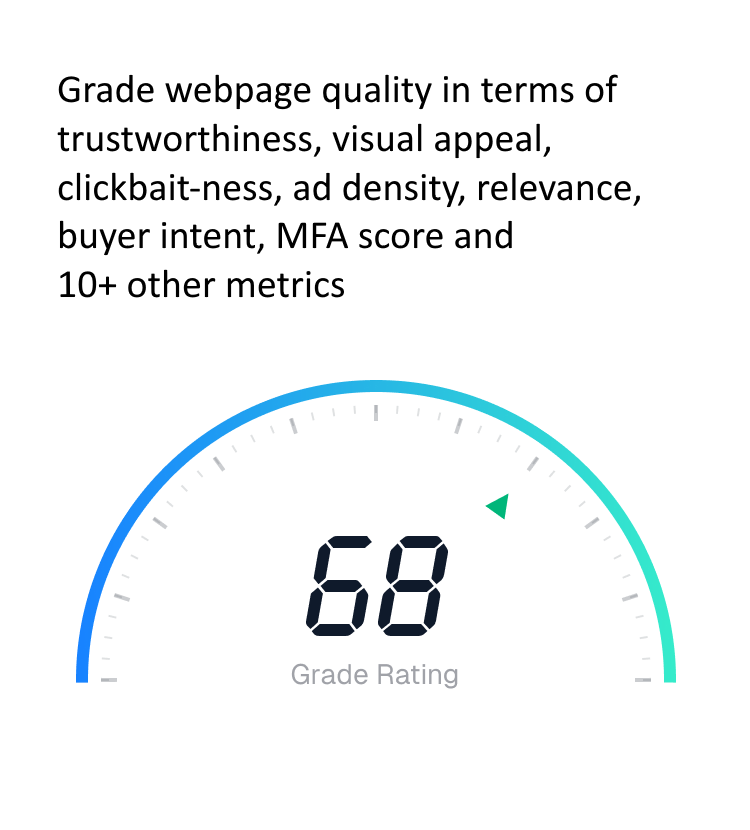

Community Health Impact

Gaming platforms using our real-time moderation report a 48% reduction in user reports, a 65% improvement in new user retention, and a 52% increase in positive community interactions within 60 days of implementation.

Integration with existing community management tools, reporting systems, and customer support workflows ensures that automated moderation enhances rather than replaces human oversight. The system provides detailed documentation and evidence for appeals processes while maintaining user privacy and supporting transparent, fair community management practices.

- Instant content processing and response

- Automated enforcement action implementation

- Coordinated harassment and griefing detection

- Behavioral pattern analysis and prediction

- Human moderator escalation and workflow integration

- Community health metrics and analytics

- Appeals process support and documentation

- Transparent community management tools

Platform Integration and Scalability

Gaming and metaverse platforms require moderation solutions that can scale with massive user bases, handle real-time processing demands, and integrate seamlessly with complex gaming infrastructure including game engines, user-generated content systems, social features, and virtual economy components. Our comprehensive solution provides flexible integration options designed specifically for gaming platform requirements.

The system integrates with popular game development frameworks including Unity, Unreal Engine, and custom gaming platforms through comprehensive APIs and SDKs optimized for real-time performance. Low-latency processing ensures that moderation doesn't impact gameplay performance while maintaining thorough analysis of all user-generated content and interactions.
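To keep moderation from stalling gameplay, an integration can enforce a latency budget on each check and fall back to a safe default when the budget is exceeded. The sketch below uses Python's `asyncio.wait_for` for this. The `classify` callables are stand-ins for a real moderation client, and the 50 ms budget and the `"pending_review"` fallback are assumptions, with the budget borrowed from the latency figure this page quotes.

```python
import asyncio

LATENCY_BUDGET_SECONDS = 0.050  # assumed budget, taken from the sub-50ms figure


async def moderate_with_budget(classify, payload, budget=LATENCY_BUDGET_SECONDS):
    """Await a verdict, but hold the content for review if the check is too slow."""
    try:
        return await asyncio.wait_for(classify(payload), timeout=budget)
    except asyncio.TimeoutError:
        return "pending_review"  # fail closed: content stays hidden until reviewed


async def fast_classifier(payload):
    # Stand-in for a moderation call that answers immediately.
    return "approved"


async def slow_classifier(payload):
    # Stand-in for a moderation call that exceeds the budget.
    await asyncio.sleep(0.2)
    return "approved"


print(asyncio.run(moderate_with_budget(fast_classifier, b"image-bytes")))
print(asyncio.run(moderate_with_budget(slow_classifier, b"image-bytes")))
```

Failing closed suits platforms with young audiences. A platform that prioritizes responsiveness could instead show the content provisionally and retract it if the late verdict is negative.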

Scalable architecture automatically adjusts to handle traffic spikes during game launches, special events, or viral content trends without degrading performance or user experience. The system maintains consistent response times whether processing thousands or millions of content items per minute, ensuring reliable protection regardless of platform scale.

Comprehensive analytics provide gaming platform operators with detailed insights into community behavior, content trends, safety risks, and moderation effectiveness. This data supports evidence-based policy decisions, regulatory compliance reporting, and continuous improvement of community safety measures while demonstrating platform commitment to user protection.

Technical Performance

Our gaming-optimized moderation processes content with average latency under 50ms, handles over 1 billion content evaluations daily, and maintains 99.98% uptime across major gaming platforms worldwide.

- Game engine and framework integration (Unity, Unreal, custom)

- Real-time API with sub-50ms response times

- Automatic scaling for variable traffic loads

- Comprehensive gaming platform analytics

- Community health monitoring and reporting

- Regulatory compliance documentation

- Custom policy engine for gaming-specific rules

- 24/7 support and emergency response protocols

- A/B testing capabilities for community management

Ready to Create Safer Gaming Communities?

Join leading gaming and metaverse platforms using our specialized AI-powered image moderation to protect users, maintain community standards, and build trust in virtual worlds.

Try Free Demo