Online Dating Application Image Moderation

Advanced AI-powered image moderation solutions designed to create safe, authentic, and trustworthy dating environments through comprehensive photo verification, fake profile detection, and inappropriate content filtering.

The Critical Importance of Dating App Safety

Online dating applications face unique moderation challenges that directly impact user safety, platform trust, and commercial success. Unlike other social platforms, dating apps facilitate real-world meetings between strangers, making user safety not just a platform concern but a potential matter of personal security. The consequences of inadequate moderation can include catfishing, harassment, fraud, and even physical harm to users who meet offline.

The dating industry processes millions of profile photos daily across platforms like Tinder, Bumble, Hinge, and emerging dating services. Each photo represents a potential point of deception, inappropriate content, or safety risk. Moderation approaches built for general social media fall short here, because a dating platform must let users express themselves and look attractive while also preventing deception and inappropriate content that could compromise user safety.

Dating platforms must build user confidence in profile authenticity while maintaining an engaging, visually appealing experience. Users need assurance that the person they're viewing and potentially meeting is real, represented accurately, and has appropriate intentions. Failed moderation can result in user churn, negative reviews, regulatory scrutiny, and serious safety incidents that can destroy platform reputation and business viability.

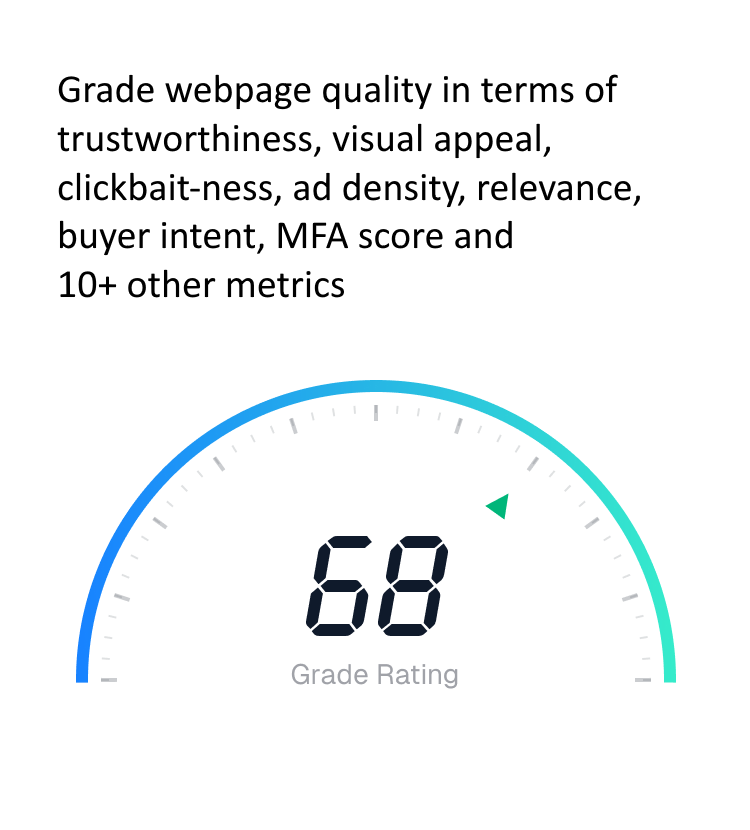

Trust and Safety Statistics

Dating apps with comprehensive image moderation see 67% higher user retention rates and 45% more successful matches, as users feel safer and more confident in profile authenticity and platform safety measures.

Fake Profile and Catfish Detection

Catfishing and fake profile creation represent the most significant threats to dating platform integrity. These deceptive practices not only harm individual users emotionally and sometimes financially, but also erode overall platform trust and user engagement. Our advanced detection system uses sophisticated AI to identify fake profiles, stolen photos, and deceptive representations with industry-leading accuracy.

The system performs reverse image searches across billions of web images to identify photos stolen from social media, modeling portfolios, or other sources. Advanced facial recognition technology can detect when multiple accounts are using the same person's photos, preventing both catfishing attempts and unauthorized use of legitimate users' images.
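As a rough illustration of how near-duplicate photo matching can work, the sketch below uses a simple average hash compared by Hamming distance. This is a generic technique chosen for illustration, not a description of the product's actual matching method. The names `average_hash`, `hamming_distance`, and `looks_like_same_photo` are hypothetical, and the input is assumed to be a photo already downscaled to a small grayscale grid.

```python
from typing import List

# Assumption: each photo has already been downscaled to the same small
# grayscale grid (rows of 0-255 values), e.g. 8x8 in a real pipeline.
Gray = List[List[int]]


def average_hash(pixels: Gray) -> int:
    """Build a bit fingerprint: 1 where a pixel is brighter than the mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two fingerprints differ."""
    return bin(a ^ b).count("1")


def looks_like_same_photo(a: Gray, b: Gray, max_distance: int) -> bool:
    """Treat two photos as the same image if their hashes are close enough."""
    return hamming_distance(average_hash(a), average_hash(b)) <= max_distance


# Tiny 2x2 grids keep the example readable.
original = [[10, 200], [220, 30]]
brightened = [[20, 210], [230, 40]]   # same picture, slightly brighter
different = [[200, 10], [30, 220]]    # inverted layout

print(looks_like_same_photo(original, brightened, max_distance=1))
print(looks_like_same_photo(original, different, max_distance=1))
```

A uniform brightness change leaves the hash unchanged because every pixel shifts together with the mean, which is why this kind of fingerprint tolerates light edits that would defeat an exact byte comparison.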

Beyond simple image matching, the system analyzes image quality, metadata, and editing patterns to identify professional photos, heavily filtered images, or manipulated content that may misrepresent a user's actual appearance. This analysis helps maintain authenticity while allowing normal photo enhancement that users expect in dating contexts.

Advanced Detection Capabilities

Our system maintains a database of over 10 billion images and can detect catfish attempts with 94% accuracy, including sophisticated operations using multiple stolen identities and carefully curated fake profiles.

Machine learning models analyze behavioral patterns associated with fake accounts, including photo upload patterns, profile completion behaviors, and interaction patterns that distinguish legitimate users from those operating with deceptive intent. This holistic approach catches not just obvious fakes but also sophisticated catfishing operations that might evade individual photo analysis.

- Reverse image search across billions of web photos

- Facial recognition for duplicate identity detection

- Professional photo and model image identification

- Image manipulation and heavy filtering detection

- Stolen social media photo identification

- Behavioral pattern analysis for fake account detection

- Age progression and photo timeline verification

- Cross-platform identity verification

Inappropriate and Explicit Content Filtering

Dating applications must carefully balance allowing users to present themselves attractively while preventing inappropriate content that could create uncomfortable experiences or violate platform policies. The challenge is particularly complex because dating contexts naturally involve some level of physical attraction and personal presentation that might be inappropriate in other social contexts.

Our specialized NSFW detection system is calibrated specifically for dating applications, providing nuanced classification that distinguishes between appropriate personal photos, suggestive content that may be acceptable in dating contexts, and explicitly inappropriate material that should be removed. This calibration ensures users can present themselves attractively without crossing lines that compromise platform safety or user experience.

The system recognizes that dating app users may share photos in swimwear, form-fitting clothing, or attractive poses that would be entirely appropriate for dating contexts but might be flagged inappropriately by generic content filters. Advanced contextual analysis ensures that legitimate dating photos aren't unnecessarily censored while still catching truly inappropriate content.
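The three-way classification described above could be sketched as a pair of thresholds over a model score. Everything here is an assumption for illustration: the `Tier` names, the `classify_nsfw` function, the 0-to-1 score range, and the threshold values are hypothetical and would need tuning against a platform's own policy.

```python
from enum import Enum


class Tier(Enum):
    APPROPRIATE = "appropriate"
    SUGGESTIVE = "suggestive"
    EXPLICIT = "explicit"


def classify_nsfw(score: float,
                  suggestive_at: float = 0.5,
                  explicit_at: float = 0.85) -> Tier:
    """Map a hypothetical 0-1 model score onto three policy tiers."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= explicit_at:
        return Tier.EXPLICIT
    if score >= suggestive_at:
        return Tier.SUGGESTIVE
    return Tier.APPROPRIATE


# A swimwear photo might score moderately; a dating platform could choose
# a higher 'suggestive' threshold than a general-purpose filter would.
print(classify_nsfw(0.55))
print(classify_nsfw(0.55, suggestive_at=0.7))
print(classify_nsfw(0.9))
```

Keeping the thresholds as parameters rather than constants is what lets the same model serve platforms with different tolerance for suggestive content.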

Beyond explicit content, the system identifies photos containing drugs, alcohol abuse, weapons, or other content that may indicate safety risks or policy violations. This comprehensive approach helps maintain platform standards while protecting users from potentially dangerous individuals who might reveal concerning behaviors through their photo choices.

- Dating-context calibrated NSFW detection

- Granular classification for different content types

- Context-aware analysis for appropriate personal photos

- Drug and alcohol abuse content identification

- Weapon and safety risk detection

- Harassment and threatening imagery filtering

- Age-inappropriate content prevention

- Cultural sensitivity and regional policy adaptation

Age Verification and Minor Protection

Protecting minors on dating platforms is both a legal requirement and a critical safety imperative. Dating applications must implement robust age verification systems to prevent underage users from accessing adult-oriented platforms while ensuring that legitimate adult users aren't unnecessarily hindered by verification processes. The consequences of failing to protect minors can include legal liability, regulatory sanctions, and serious safety incidents.

Our advanced age verification system uses sophisticated facial analysis to estimate user age from profile photos, flagging potentially underage users for additional verification. The system analyzes facial features, developmental markers, and contextual clues to identify users who may be younger than they claim, enabling platforms to implement additional identity verification requirements before allowing platform access.
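One way to picture the flagging step is as a conservative comparison between an estimated age range and the platform's minimum age. The sketch below assumes a hypothetical `AgeEstimate` with an uncertainty margin and a hypothetical `needs_age_verification` helper; the real system's outputs and decision rules are not specified in this document.

```python
from dataclasses import dataclass


@dataclass
class AgeEstimate:
    years: float   # point estimate from a (hypothetical) facial age model
    margin: float  # +/- uncertainty in years


def needs_age_verification(estimate: AgeEstimate,
                           claimed_age: int,
                           minimum_age: int = 18) -> bool:
    """Flag the user if the low end of the estimate falls under the minimum."""
    if claimed_age < minimum_age:
        return True
    lower_bound = estimate.years - estimate.margin
    return lower_bound < minimum_age


# Estimate of 19 +/- 3 could be as low as 16, so the profile is flagged.
print(needs_age_verification(AgeEstimate(19.0, 3.0), claimed_age=22))
# Estimate of 30 +/- 4 stays well above 18, so no extra check is needed.
print(needs_age_verification(AgeEstimate(30.0, 4.0), claimed_age=28))
```

Using the lower bound rather than the point estimate errs toward asking for extra verification, which fits a setting where a missed minor is far costlier than an extra ID check.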

Regulatory Compliance

Our age verification technology helps platforms comply with COPPA, GDPR age consent requirements, and regional age verification laws across 45+ countries, adapting automatically to local regulatory requirements.

The system also identifies photos that may contain minors in backgrounds or group photos, even when the primary profile owner is of age. This capability prevents inadvertent exposure of minors on dating platforms and helps maintain strict age-appropriate environments. Advanced detection can identify school settings, youth-oriented activities, or other contextual clues that might indicate underage individuals in photos.

Beyond direct age detection, the system identifies content that may specifically appeal to or target minors, helping platforms maintain adult-only environments that comply with regulations and industry best practices for user safety and legal protection.

- Advanced facial age estimation technology

- Underage user identification and flagging

- Minor detection in group photos and backgrounds

- School and youth setting identification

- Content analysis for minor-targeting material

- Regulatory compliance across multiple jurisdictions

- Identity document verification integration

- Parental control and safety reporting systems

Photo Authenticity and Enhancement Detection

Dating success often depends on accurate representation, but users naturally want to present themselves in the best possible light. Our photo authenticity system strikes a balance between allowing normal photo enhancement and detecting excessive manipulation that could constitute deceptive representation. This nuanced approach maintains user satisfaction while promoting honesty and authentic connections.

The system can detect a range of photo editing, from basic filters and lighting adjustments to extensive facial manipulation, body modification, and deepfake technology. Rather than simply blocking edited photos, the system provides granular classification that allows platforms to implement policies appropriate for their user base and platform philosophy.
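A platform-configurable policy over editing levels might look like the following sketch. The level names in `EDIT_LEVELS` and the `policy_action` function are hypothetical labels invented for illustration; the actual classification categories are not listed in this document.

```python
# Hypothetical editing levels, ordered from least to most manipulated.
EDIT_LEVELS = (
    "none",
    "basic_filter",
    "heavy_retouch",
    "face_or_body_altered",
    "synthetic",
)


def policy_action(level: str,
                  allow_up_to: str = "basic_filter",
                  review_up_to: str = "heavy_retouch") -> str:
    """Return 'allow', 'review', or 'reject' for a detected editing level."""
    rank = EDIT_LEVELS.index(level)  # raises ValueError for unknown levels
    if rank <= EDIT_LEVELS.index(allow_up_to):
        return "allow"
    if rank <= EDIT_LEVELS.index(review_up_to):
        return "review"
    return "reject"


print(policy_action("basic_filter"))
print(policy_action("heavy_retouch"))
print(policy_action("synthetic"))
# A more permissive platform can widen what it allows without review.
print(policy_action("heavy_retouch", allow_up_to="heavy_retouch"))
```

Because the detector only reports a level and the policy is a separate mapping, two platforms can share the same detection while enforcing different rules.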

Advanced analysis identifies AI-generated or heavily manipulated photos that may not represent the user's actual appearance. This includes detection of facial swap technology, body modification filters, and synthetic image generation that could mislead potential matches about a user's actual appearance. The goal is maintaining reasonable authenticity expectations while allowing normal photo editing that users expect.

The system also analyzes photo recency and consistency across multiple profile photos, identifying profiles that use significantly outdated photos or mix photos from different time periods in ways that might misrepresent current appearance. This temporal analysis helps ensure that users represent themselves as they look now rather than relying on photos that are years out of date or heavily edited.
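The recency and consistency checks can be sketched with plain date arithmetic, assuming capture dates are available (for example from photo metadata, which is often missing or stripped). The `recency_flags` function, its flag names, and the day limits below are hypothetical values for illustration only.

```python
from datetime import date
from typing import List


def recency_flags(capture_dates: List[date],
                  today: date,
                  max_age_days: int = 730,
                  max_spread_days: int = 1825) -> List[str]:
    """Flag profiles whose photos are all old or span very different periods."""
    if not capture_dates:
        return ["no_dates_available"]
    newest = max(capture_dates)
    oldest = min(capture_dates)
    flags = []
    if (today - newest).days > max_age_days:
        flags.append("all_photos_outdated")
    if (newest - oldest).days > max_spread_days:
        flags.append("mixed_time_periods")
    return flags


today = date(2024, 1, 1)
print(recency_flags([date(2015, 6, 1), date(2023, 10, 1)], today))
print(recency_flags([date(2019, 1, 1)], today))
print(recency_flags([date(2023, 5, 1), date(2023, 11, 1)], today))
```

A flag here is best treated as a prompt to ask the user for a recent photo rather than as proof of deception, since older photos are common and capture dates can be wrong.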

- Multi-level photo editing detection

- AI-generated and deepfake image identification

- Facial modification and body enhancement detection

- Photo age and recency analysis

- Consistency analysis across multiple profile photos

- Filter and enhancement level classification

- Professional photography vs. personal photo identification

- Authenticity scoring and policy flexibility

Harassment and Safety Risk Prevention

Dating applications must proactively identify users who may pose safety risks to other users, including those who display concerning behaviors, harassment patterns, or potentially dangerous intentions. Our comprehensive safety analysis examines not just individual photos but patterns of behavior and content that may indicate risks to other users' safety and well-being.

The system identifies visual indicators of concerning behavior including photos with weapons, aggressive poses, substance abuse, or other content that may indicate higher risk profiles. Advanced behavioral analysis can detect patterns consistent with harassment, stalking, or predatory behavior by analyzing photo choices, upload patterns, and content themes across user profiles.

Photo metadata analysis can reveal concerning patterns such as photos taken near schools, playgrounds, or other locations that might indicate inappropriate interest in minors. The system also identifies photos containing personal information of others, threatening gestures, or content that might be used for doxxing or harassment purposes.

Proactive Safety Measures

Our safety risk assessment helps dating platforms identify potentially dangerous users before incidents occur, contributing to a 73% reduction in user safety reports and a 58% improvement in user confidence metrics.

Integration with law enforcement databases and sex offender registries provides additional layers of protection, while facial recognition technology can identify users who have been previously banned for safety violations attempting to create new accounts under different identities.
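Detecting a previously banned user behind a new account is commonly framed as comparing face embeddings by similarity. The sketch below assumes embeddings are already produced by some face model, which is not shown; `cosine_similarity`, `match_banned`, the three-dimensional vectors, and the 0.9 threshold are all hypothetical and far simpler than a production setup.

```python
import math
from typing import Dict, List, Optional


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def match_banned(embedding: List[float],
                 banned: Dict[str, List[float]],
                 threshold: float = 0.9) -> Optional[str]:
    """Return the most similar banned account id at or above the threshold."""
    best_id, best_score = None, threshold
    for account_id, banned_embedding in banned.items():
        score = cosine_similarity(embedding, banned_embedding)
        if score >= best_score:
            best_id, best_score = account_id, score
    return best_id


banned_accounts = {"u1": [1.0, 0.0, 0.0], "u2": [0.0, 1.0, 0.0]}
print(match_banned([0.99, 0.05, 0.0], banned_accounts))
print(match_banned([0.5, 0.5, 0.7], banned_accounts))
```

A match of this kind is a candidate for human review rather than an automatic ban, since similarity thresholds trade missed matches against false accusations.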

- Behavioral pattern analysis for risk assessment

- Weapon and threatening imagery detection

- Substance abuse and concerning behavior identification

- Location analysis for inappropriate interest patterns

- Personal information and doxxing prevention

- Harassment pattern recognition across platforms

- Integration with safety databases and registries

- Recidivist user identification and prevention

Implementation and Platform Integration

Implementing comprehensive image moderation for dating applications requires careful integration that maintains user experience while providing robust safety protections. Our solution provides flexible deployment options that work with popular dating app frameworks as well as custom platforms, ensuring that safety measures enhance rather than hinder the user experience.

Real-time photo processing ensures that inappropriate content is caught immediately upon upload, preventing it from ever appearing to other users. The system provides instant feedback to users about photo requirements, helping them understand and comply with platform policies while maintaining engagement and reducing frustration.
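The upload-time flow described above can be pictured as a list of checks that each return a user-facing message when a photo fails. This is a minimal sketch under stated assumptions: the `Photo` dictionary keys (`nsfw_score`, `face_count`), the check functions, and `moderate_upload` are hypothetical and do not reflect a documented API.

```python
from typing import Callable, Dict, List, Optional

# Assumption: upstream models have already attached their scores to the photo.
Photo = Dict[str, float]
Check = Callable[[Photo], Optional[str]]


def check_explicit(photo: Photo) -> Optional[str]:
    if photo.get("nsfw_score", 0.0) >= 0.85:
        return "This photo appears to contain explicit content."
    return None


def check_face_visible(photo: Photo) -> Optional[str]:
    if photo.get("face_count", 0) < 1:
        return "Please upload a photo where your face is clearly visible."
    return None


def moderate_upload(photo: Photo, checks: List[Check]) -> Dict[str, object]:
    """Run every check and collect feedback so the user sees all issues at once."""
    messages = [check(photo) for check in checks]
    feedback = [message for message in messages if message is not None]
    return {"accepted": not feedback, "feedback": feedback}


checks = [check_explicit, check_face_visible]
print(moderate_upload({"nsfw_score": 0.1, "face_count": 1}, checks))
print(moderate_upload({"nsfw_score": 0.95, "face_count": 0}, checks))
```

Running all checks instead of stopping at the first failure lets the user fix every problem in a single retry, which supports the goal of reducing frustration at upload time.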

Advanced analytics provide platform administrators with comprehensive insights into user behavior patterns, safety risks, and moderation effectiveness. This data enables continuous policy refinement, helps identify emerging threats, and provides documentation for regulatory compliance and safety reporting requirements.

The system integrates with existing user reporting mechanisms, customer support workflows, and safety response procedures, so that automated checks and human processes work as one protection layer. When combined with human moderation teams, AI-powered analysis dramatically improves response times and accuracy for safety-critical decisions.

Platform Success Metrics

Dating platforms using our comprehensive moderation solution report 56% higher user satisfaction scores, 41% more successful matches, and 78% reduction in safety-related user reports within three months of implementation.

- Real-time photo processing and instant feedback

- Seamless integration with popular dating app platforms

- Comprehensive safety analytics and reporting

- Integration with existing moderation workflows

- User education and policy communication tools

- A/B testing capabilities for policy optimization

- 24/7 monitoring and emergency response protocols

- Regulatory compliance documentation and reporting

- Custom model training for platform-specific needs

Ready to Create a Safer Dating Environment?

Join leading dating platforms using our specialized AI-powered image moderation to build user trust, prevent fraud, and create authentic, safe spaces for meaningful connections.
