Online Forums & Communities Image Moderation

Comprehensive AI-powered image moderation solutions designed to maintain community standards, prevent harassment, ensure constructive discussions, and build thriving online communities across forums, discussion boards, and specialized community platforms.

The Foundation of Healthy Online Communities

Online forums and communities represent the backbone of digital discourse, providing spaces for specialized discussions, knowledge sharing, and community building around countless topics and interests. From technical Q&A sites like Stack Overflow and broad discussion platforms like Reddit to specialized communities for hobbies, support groups, and professional networks, these platforms rely on clear community standards and effective moderation to maintain productive, respectful environments that encourage meaningful participation and knowledge exchange.

The challenge facing forum moderators is maintaining the delicate balance between free expression and community standards. Unlike social media platforms focused on personal sharing, forums center on topical discussions where inappropriate images can derail conversations, create hostile environments, and drive away valuable community members. The stakes are particularly high for niche communities where reputation and expertise matter, making community health essential for platform survival and growth.

Modern forums must handle diverse content types, including technical diagrams, infographics, memes, personal photos, and professional imagery, each of which calls for a different moderation approach. The challenge is compounded by the global nature of online communities, where cultural differences over what counts as appropriate content make moderation decisions complex and require balancing inclusivity with community standards.

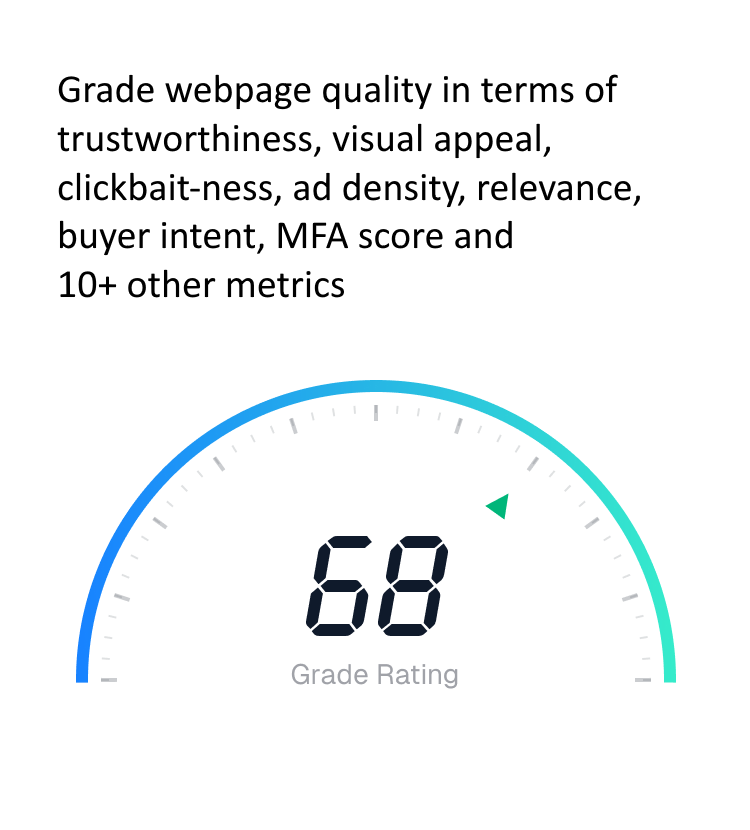

Community Health Impact

Forums with effective image moderation see 58% higher user retention rates, 73% more quality contributions, and 41% faster resolution of community disputes, creating self-sustaining communities that thrive long-term.

Harassment Prevention and User Safety

Online harassment through images represents a persistent threat to community health, including doxxing through personal photos, targeted harassment campaigns, intimidating imagery, and coordinated attacks designed to silence or drive away community members. Our comprehensive harassment prevention system identifies and prevents image-based harassment while preserving the legitimate discussion and personal expression that make communities valuable and engaging.

The system detects attempts to share personal information through images including screenshots of private conversations, personal documents, addresses, phone numbers, and other identifying information that could be used for harassment or real-world harm. Advanced OCR technology combined with contextual analysis can identify even subtle attempts to expose private information or coordinate harassment campaigns against community members.
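The page does not describe how the OCR and contextual checks work internally. As a rough illustration, assuming an OCR step has already turned the image into plain text, a first-pass screen for contact details might look like the sketch below. The pattern set and the `find_pii` and `should_hold_for_review` names are hypothetical, and the regexes are deliberately simple (US-style phone numbers and English street names only).

```python
import re
from dataclasses import dataclass

# Hypothetical, deliberately simple patterns; production rules would need
# locale-aware formats and far more coverage.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"(?:\+?\d{1,2}[\s.-]?)?\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}"),
    "street_address": re.compile(
        r"\b\d{1,5}\s+(?:[A-Z][a-z]+\s+){1,3}(?:Street|St|Avenue|Ave|Road|Rd|Lane|Ln|Drive|Dr)\b"
    ),
}


@dataclass
class PIIFinding:
    kind: str  # which pattern matched
    text: str  # the matched substring from the OCR output


def find_pii(ocr_text: str) -> list:
    """Scan OCR-extracted text and return every pattern match."""
    findings = []
    for kind, pattern in PII_PATTERNS.items():
        for match in pattern.finditer(ocr_text):
            findings.append(PIIFinding(kind, match.group(0)))
    return findings


def should_hold_for_review(ocr_text: str, min_findings: int = 1) -> bool:
    """Hold the image for a human moderator if enough PII-like matches appear."""
    return len(find_pii(ocr_text)) >= min_findings
```

A match here should route the image to a human moderator rather than trigger automatic removal, since a phone number in a screenshot may just as well be a public support hotline; that judgment is what the contextual analysis is for.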

Coordinated harassment detection identifies patterns where multiple users share similar threatening or intimidating images, participate in organized harassment campaigns, or attempt to use community platforms to organize off-platform harassment. This systemic approach protects vulnerable community members while maintaining fair treatment for users engaged in legitimate community discussions and debates.
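One plausible way to surface the "multiple users share similar images" signal is near-duplicate matching on perceptual hashes. The sketch assumes a 64-bit perceptual hash (such as an average hash or pHash) has already been computed for each upload; the `ImagePost` type, the Hamming-distance cutoff, the one-hour window, and the three-account minimum are illustrative choices, not documented parameters of this product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImagePost:
    user_id: str
    phash: int        # 64-bit perceptual hash, computed upstream (assumed)
    timestamp: float  # seconds since the epoch


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def coordinated_clusters(posts, max_distance=6, window_s=3600, min_users=3):
    """Return sets of accounts that posted near-duplicates of an anchor image
    within `window_s` seconds after it, keeping only groups with at least
    `min_users` distinct accounts."""
    ordered = sorted(posts, key=lambda p: p.timestamp)
    flagged = set()
    for i, anchor in enumerate(ordered):
        users = {anchor.user_id}
        for other in ordered[i + 1:]:
            if other.timestamp - anchor.timestamp > window_s:
                break  # sorted by time, so every later post is outside the window
            if hamming(anchor.phash, other.phash) <= max_distance:
                users.add(other.user_id)
        if len(users) >= min_users:
            flagged.add(frozenset(users))
    return flagged
```

Counting distinct accounts, not posts, is what separates a coordinated campaign from one user reposting the same meme.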

Proactive Protection

Our harassment prevention system identifies potential harassment campaigns in their early stages, preventing 84% of coordinated attacks before they can significantly impact targeted community members or overall community health.

The system also identifies imagery designed to intimidate or threaten specific individuals or groups, including veiled threats, symbolic violence, and content designed to create fear or anxiety among community members. This protection is particularly important for communities discussing controversial topics or providing support for vulnerable populations who may be targeted by bad actors.

- Personal information and doxxing prevention

- Coordinated harassment campaign detection

- Threatening and intimidating imagery identification

- Private conversation screenshot detection

- Cross-platform harassment pattern recognition

- Vulnerable user protection protocols

- Real-time threat assessment and escalation

- Community safety reporting and documentation

Topic-Relevant Content and Quality Control

Forum communities thrive on relevant, high-quality discussions that provide value to participants seeking information, advice, or engagement around specific topics. Our intelligent content relevance system helps maintain community focus by identifying off-topic images, low-quality content, spam imagery, and irrelevant material that can disrupt productive discussions and degrade community value over time.

The system analyzes image content in relation to forum topics, discussion threads, and community guidelines to ensure posted images contribute meaningfully to ongoing conversations. Advanced image recognition can identify technical diagrams, product photos, relevant illustrations, and other content that supports productive discussion while flagging random images, unrelated memes, and attention-seeking content that disrupts community focus.
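Relevance scoring is not specified on this page. A common approach, assumed here, is to embed the image and the thread text (for example its title and opening post) into a shared vector space with a CLIP-style image-text model and compare them by cosine similarity. The embeddings are taken as given, and the two thresholds, which leave a middle band for human review, are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def relevance_decision(image_vec, thread_vec, approve_at=0.30, flag_below=0.15):
    """Map image-to-thread similarity to a moderation outcome.
    Thresholds are hypothetical and would be tuned per community."""
    score = cosine(image_vec, thread_vec)
    if score >= approve_at:
        return "approve", score
    if score < flag_below:
        return "flag_off_topic", score
    return "human_review", score  # ambiguous middle band goes to a person
```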

Quality assessment identifies low-resolution images, poorly composed photos, or content that doesn't meet community standards for helpful, informative contributions. This quality control helps maintain professional standards in technical communities while preserving accessibility for users who may not have professional photography skills but contribute valuable content and insights.
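Resolution can be checked directly from image dimensions; sharpness needs a heuristic. This sketch uses the variance of the Laplacian, a widely used blur measure, on a grayscale pixel grid passed as nested lists to stay dependency-free. The `min_side` and `min_sharpness` thresholds are placeholders that would need tuning per community, and the function only reports issues, so a caller can suggest a better photo or queue the post for review instead of rejecting amateur contributions outright.

```python
def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian over interior pixels.
    Low values mean few sharp edges, which often indicates blur."""
    h, w = len(gray), len(gray[0])
    values = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            values.append(lap)
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def quality_issues(width, height, gray, min_side=320, min_sharpness=100.0):
    """Return a list of quality problems; an empty list means no issue found."""
    issues = []
    if min(width, height) < min_side:
        issues.append("low_resolution")
    if laplacian_variance(gray) < min_sharpness:
        issues.append("possibly_blurry")
    return issues
```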

Spam and promotional content detection identifies attempts to use image posts for commercial promotion, affiliate marketing, or other activities that may violate community rules on self-promotion. The system can distinguish between legitimate sharing of relevant products or services and exploitative spam that damages community trust and value.

- Topic relevance analysis and enforcement

- Image quality assessment and standards

- Spam and promotional content identification

- Technical diagram and educational content recognition

- Community guideline compliance verification

- Discussion thread context analysis

- Value-added content promotion

- Commercial activity and self-promotion detection

Hate Speech and Extremist Content Prevention

Online forums can become breeding grounds for hate speech, extremist ideologies, and radicalization if not properly moderated, particularly in communities discussing controversial topics or serving diverse global audiences. Our comprehensive hate speech detection system identifies offensive imagery, extremist symbols, and content designed to promote hatred or discrimination while preserving legitimate political discourse and controversial but legal discussions.

The system recognizes hate symbols, extremist imagery, and visual references used to promote discrimination based on race, religion, gender, sexual orientation, nationality, or other protected characteristics. Advanced analysis can identify both obvious hate symbols and coded imagery, dog whistles, and subtle references that may not be immediately apparent to human moderators but serve to promote extremist ideologies within communities.

Contextual analysis distinguishes between hate speech and legitimate discussion of controversial topics, historical education, news coverage, or academic analysis that may involve similar imagery but serves educational rather than promotional purposes. This nuanced approach ensures that communities can discuss difficult topics while preventing exploitation by extremist groups seeking to recruit or radicalize community members.

Community Protection

Forums implementing our hate speech prevention see 76% reduction in extremist content, 89% improvement in community diversity metrics, and significantly higher satisfaction scores among minority community members.

The system also identifies recruitment imagery, propaganda materials, and content designed to normalize extremist viewpoints or gradually introduce radical ideologies to community members. This proactive approach prevents communities from being gradually transformed into extremist spaces while maintaining openness to diverse legitimate political and social viewpoints.

- Hate symbol and extremist imagery recognition

- Coded hate speech and dog whistle detection

- Recruitment and propaganda material identification

- Contextual analysis for legitimate controversial discussion

- Gradual radicalization pattern recognition

- Protected group targeting identification

- Educational vs. promotional content distinction

- Community diversity protection measures

Age-Appropriate Community Management

Many forum communities serve mixed age groups or have specific age demographics that require tailored content moderation approaches. Family-friendly forums, educational communities, and platforms serving minors require comprehensive age-appropriate content filtering, while adult communities need protection from inappropriate content that could violate platform policies or legal requirements in various jurisdictions.

The system implements sophisticated age-appropriateness analysis that considers community demographics, stated age policies, and legal requirements across different jurisdictions. Content that might be acceptable in adult-oriented technical forums could be inappropriate for educational communities or family-friendly discussion spaces, requiring nuanced policy implementation that reflects community values and legal obligations.

Advanced detection identifies content that may be specifically designed to appeal to or groom minors, including predatory behavior patterns, inappropriate relationship discussions, or content that attempts to normalize inappropriate interactions with younger community members. This protection is particularly important for communities focused on education, gaming, or other topics that naturally attract younger participants.

The system also manages content warnings, age gates, and filtering systems that allow communities to provide nuanced access controls based on user age, community membership level, and content sensitivity. This approach enables communities to serve diverse audiences while maintaining appropriate safety protections for all participants.
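How age gates combine user age, membership level, and content sensitivity is not specified here. A minimal sketch, assuming a three-level sensitivity rating from the classifier, a per-community ceiling, and a hypothetical numeric trust tier, resolves each view request to show, warn (a click-through content warning), or block. The age of 18 is only an example; the legal threshold varies by jurisdiction.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class Sensitivity(IntEnum):
    GENERAL = 0
    MATURE = 1      # e.g. graphic but non-explicit imagery
    ADULT_ONLY = 2


@dataclass
class Viewer:
    age: Optional[int]       # None when the age is unknown or unverified
    membership_level: int    # hypothetical trust tier; 0 = new member
    opted_into_adult: bool


@dataclass
class CommunityPolicy:
    max_sensitivity: Sensitivity  # ceiling set by the community's age policy
    min_level_for_mature: int = 1


def access(viewer: Viewer, sensitivity: Sensitivity, policy: CommunityPolicy) -> str:
    """Resolve a view request to 'show', 'warn' (click-through warning) or 'block'."""
    if sensitivity > policy.max_sensitivity:
        return "block"                    # never allowed in this community
    if sensitivity == Sensitivity.GENERAL:
        return "show"
    if viewer.age is None or viewer.age < 18:  # 18 is an example threshold
        return "block"                    # unverified or minor viewers
    if sensitivity == Sensitivity.ADULT_ONLY:
        return "warn" if viewer.opted_into_adult else "block"
    # MATURE content: adults who have reached the required membership level
    if viewer.membership_level < policy.min_level_for_mature:
        return "block"
    return "warn"
```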

- Age-demographic specific content filtering

- Community policy adaptation for mixed age groups

- Predatory behavior pattern recognition

- Minor targeting and grooming prevention

- Content warning and age gate implementation

- Jurisdiction-specific legal compliance

- Educational content appropriateness verification

- Family-friendly community protection

Community Self-Governance and Democratic Moderation

Many successful online communities rely on community self-governance and democratic moderation approaches where community members participate in moderation decisions, vote on policy changes, and help maintain community standards through collective action. Our intelligent system supports these democratic approaches by providing tools for community voting, transparent moderation, and collective decision-making that preserves community autonomy while ensuring consistent policy enforcement.

The system provides community voting tools for borderline content decisions, enabling communities to collectively determine the appropriateness of questionable images rather than relying solely on administrator judgment. Advanced analytics help identify when community consensus exists and when additional expert review may be needed for complex moderation decisions that involve cultural sensitivity, legal concerns, or technical policy interpretation.
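A simple way to decide when community consensus exists, assuming plain keep/remove votes, is to require a quorum and a supermajority and to escalate to expert review otherwise. The quorum of 10 and the 70% threshold are hypothetical defaults; a real deployment might also weight votes by member reputation.

```python
from collections import Counter


def tally(votes, quorum=10, consensus=0.7):
    """votes: iterable of labels such as 'keep' or 'remove'.
    Returns (decision, share of the leading label)."""
    counts = Counter(votes)
    total = sum(counts.values())
    if total == 0 or total < quorum:
        return "pending", 0.0          # not enough participation yet
    label, top = counts.most_common(1)[0]
    share = top / total
    if share >= consensus:
        return label, share            # clear community consensus
    return "escalate_to_expert", share  # split vote goes to expert review
```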

Transparent moderation logs and audit trails enable community members to understand and review moderation decisions, contributing to community trust and accountability. The system provides detailed reasoning for automated decisions while enabling human moderators to document their thinking for community review and feedback, fostering transparency that builds community confidence in moderation processes.
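The page does not say how audit trails are protected against silent edits. One option, sketched here as an assumption rather than a description of the product, is to hash-chain log entries with SHA-256 so that changing any earlier entry breaks verification. Each entry records the action, who or what took it, and the rationale shown to members; the `AuditLog` class and its field names are hypothetical.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Stable SHA-256 of an entry body (keys sorted for determinism)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, post_id, action, actor, reason, ts=None):
        """Append an entry linked to the hash of the previous one."""
        body = {
            "post_id": post_id,
            "action": action,
            "actor": actor,    # "automated" or a moderator handle
            "reason": reason,  # rationale shown to community members
            "ts": time.time() if ts is None else ts,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; False if any entry was altered or reordered."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```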

Community Engagement

Communities using democratic moderation tools see 67% higher member satisfaction with moderation decisions and 45% more active participation in community governance, creating stronger, more resilient community cultures.

Appeal processes and community mediation tools provide structured approaches for resolving moderation disputes while preserving community cohesion. The system facilitates productive dialogue between community members, moderators, and administrators to resolve conflicts and improve policies based on community experience and evolving needs.

- Community voting on borderline content decisions

- Transparent moderation logs and decision documentation

- Democratic policy development and amendment processes

- Community consensus analysis and measurement

- Appeal and mediation process facilitation

- Member satisfaction and trust metrics

- Community governance analytics and insights

- Collaborative policy enforcement tools

Platform Integration and Customization

Online forums and communities operate on diverse platforms including Reddit, Discord, traditional forum software like vBulletin and phpBB, specialized community platforms, and custom-built solutions. Our comprehensive moderation system provides flexible integration options designed to work seamlessly with existing community infrastructure while preserving unique community cultures and established moderation workflows.

The system integrates with popular community platforms through comprehensive APIs, plugins, and middleware solutions that minimize implementation complexity while providing full-featured moderation capabilities. Integration preserves existing community features, user experiences, and administrative workflows while adding advanced AI-powered moderation that enhances rather than replaces human community management.

Customizable policy engines enable communities to implement unique moderation standards that reflect their specific focus areas, community values, and user demographics. Technical forums may prioritize diagram quality and relevance, while creative communities might emphasize originality and artistic merit, requiring different analytical approaches and policy frameworks tailored to community needs.
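Per-community policies could be expressed as data rather than code. In this hypothetical configuration, each community maps a detector category to a review threshold and a removal threshold, and the strictest resulting action wins; the community names, category labels, and numbers are illustrative only. The technical forum is strict about image quality, while the art community never auto-removes for quality.

```python
# Hypothetical policies: detector category -> (review_at, remove_at)
POLICIES = {
    "electronics_help": {
        "off_topic": (0.60, 0.90),
        "low_quality": (0.50, 0.95),
        "nsfw": (0.20, 0.50),
    },
    "digital_art": {
        "off_topic": (0.80, 0.97),
        "low_quality": (0.90, 1.01),  # remove_at > 1.0: never auto-remove
        "nsfw": (0.40, 0.80),
    },
}

RANK = {"allow": 0, "review": 1, "remove": 2}


def evaluate(community, scores):
    """scores: detector category -> probability in [0, 1].
    Returns the strictest action triggered by any category."""
    policy = POLICIES[community]
    action = "allow"
    for category, score in scores.items():
        if category not in policy:
            continue  # this community does not act on that detector
        review_at, remove_at = policy[category]
        if score >= remove_at:
            candidate = "remove"
        elif score >= review_at:
            candidate = "review"
        else:
            candidate = "allow"
        if RANK[candidate] > RANK[action]:
            action = candidate
    return action
```

The same detector scores can then produce different outcomes in different communities without any code change.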

Scalable architecture adapts to community size and activity levels, providing consistent performance whether serving hundreds or millions of community members. The system handles traffic spikes during viral discussions or community events without degrading performance, ensuring that moderation never becomes a bottleneck for community growth or engagement.

Flexible Implementation

Our forum moderation solution supports over 200 community platforms and custom implementations, processing 500+ million community posts monthly while maintaining 99.9% uptime and sub-100ms response times.
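The scale figures translate into concrete per-second and per-month numbers. Assuming a 30-day month, 500 million posts per month works out to roughly 193 posts per second on average, and 99.9% uptime allows about 43.2 minutes of downtime per month:

```python
SECONDS_PER_30_DAY_MONTH = 30 * 24 * 60 * 60  # 2,592,000 s (assumed 30-day month)

posts_per_month = 500_000_000
uptime = 0.999

# Average throughput the moderation pipeline must sustain
avg_posts_per_second = posts_per_month / SECONDS_PER_30_DAY_MONTH
# Downtime permitted by the uptime figure, in minutes per month
allowed_downtime_min = (1 - uptime) * SECONDS_PER_30_DAY_MONTH / 60

print(f"average load: {avg_posts_per_second:.0f} posts/s")
print(f"downtime budget: {allowed_downtime_min:.1f} min/month")
```

These are averages; load during viral threads or community events peaks above the mean, which is where the response-time target matters most.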

- Multi-platform integration (Reddit, Discord, phpBB, custom)

- Customizable policy engines for unique community needs

- Scalable architecture for communities of all sizes

- Preservation of existing workflows and user experiences

- Community-specific analytics and insights

- Flexible deployment options (cloud, on-premises, hybrid)

- 24/7 support and community management consultation

- Regular updates and feature enhancements

- A/B testing for policy optimization
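The page lists A/B testing without saying how policy variants are compared. One standard option, assumed here, is a two-sided two-proportion z-test on an outcome such as the share of removals overturned on appeal. The counts in the usage lines are made up for illustration.

```python
import math


def two_proportion_z(success_a, n_a, success_b, n_b):
    """Two-sided two-proportion z-test. Returns (z, p_value)."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("sample sizes must be positive")
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # identical all-or-nothing outcomes: no evidence of a difference
    z = (p_a - p_b) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Illustrative (made-up) counts: removals overturned on appeal under
# policy variant A (120 of 2000) versus variant B (80 of 2000).
z, p = two_proportion_z(120, 2000, 80, 2000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A lower overturn rate suggests a variant removes fewer images the community considers acceptable, though the metric chosen for a real test would depend on each community's goals.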

Ready to Build Healthier Online Communities?

Join leading forums and communities using our AI-powered image moderation to maintain community standards, prevent harassment, and create thriving spaces for meaningful discussion and knowledge sharing.
