Welcome to the new standard in digital safety. Our Image Moderation API lets platforms automatically detect and filter inappropriate, unsafe, or prohibited visual content in real time. From social networks to e-commerce marketplaces, we help you protect your users, uphold community standards, and safeguard your brand's reputation at scale.

Powered by deep learning models, our API covers more than 20 moderation categories, including NSFW content, violence, hate symbols, and text in images. Integrate in minutes with the REST API or an SDK and scale to millions of user-generated images per day.

99.5% accuracy in NSFW detection

99.9%+ enterprise-grade uptime

Under 200ms processing per image

20+ distinct moderation categories

Process millions of images daily

REST API & SDKs for Python, JS, PHP

Discover the powerful, nuanced features that make our Image Moderation API the ultimate solution for ensuring digital safety and brand integrity.

Protecting your community from adult content is the cornerstone of content moderation. However, not all adult content is the same, and a one-size-fits-all approach can lead to poor user experience or overly aggressive censorship. Our API provides a highly granular Not Safe For Work (NSFW) detection system that differentiates between various levels of sensitive content, allowing you to tailor your moderation policy with precision.

Our model is trained to identify and classify content into distinct categories:
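As a rough illustration of tailoring a policy to these categories, the sketch below compares per-class confidence scores against a platform-specific policy. The field names `moderation_classes`, `class_name`, and `confidence` follow the sample response in this document; the `violations` helper, the two policies, and every threshold value are hypothetical and would need tuning against real traffic.

```python
# Hypothetical policies: class name -> minimum confidence that counts as a violation.
# The class names come from the sample response; the numbers are illustrative only.
STRICT_POLICY = {"Explicit Nudity": 0.5, "Suggestive / Racy": 0.5}
LENIENT_POLICY = {"Explicit Nudity": 0.5}


def violations(response: dict, policy: dict) -> list:
    """Return the class names whose confidence meets or exceeds the policy threshold."""
    found = []
    for item in response.get("moderation_classes", []):
        threshold = policy.get(item["class_name"])
        if threshold is not None and item["confidence"] >= threshold:
            found.append(item["class_name"])
    return found


# Trimmed copy of the sample response
sample = {
    "moderation_classes": [
        {"class_name": "Suggestive / Racy", "confidence": 0.965},
        {"class_name": "Explicit Nudity", "confidence": 0.012},
        {"class_name": "Weapons", "confidence": 0.891},
    ]
}

print(violations(sample, STRICT_POLICY))   # a family-oriented platform might flag racy content
print(violations(sample, LENIENT_POLICY))  # a more permissive platform might not
```

Keeping thresholds in a plain dictionary means the same response can be evaluated under different policies without changing the request.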

Harmful content isn't always visual. Users frequently embed offensive messages, hate speech, or personal information within images like memes, screenshots, or posters. Without the ability to read this text, your moderation efforts are incomplete. Our API integrates a powerful Optical Character Recognition (OCR) engine with a sophisticated Natural Language Processing (NLP) model to moderate text found within images.

The process is seamless. First, our OCR technology accurately extracts any text from the image, regardless of font, size, or orientation. Then, our NLP model analyzes this text for violations, including:
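The two-step flow (extract text, then classify it) surfaces in the response as an `ocr_results` object, judging by the sample response in this document. The sketch below reads that object and lists the text classes above a cutoff; the `flagged_text_classes` helper and the 0.5 default threshold are assumptions for illustration, not part of the API.

```python
def flagged_text_classes(response: dict, threshold: float = 0.5) -> list:
    """Return text-moderation class names at or above the (hypothetical) threshold."""
    ocr = response.get("ocr_results") or {}
    return [
        item["class_name"]
        for item in ocr.get("text_moderation", [])
        if item["confidence"] >= threshold
    ]


# Trimmed copy of the sample response
sample = {
    "ocr_results": {
        "detected_text": "GET RICH QUICK! CLICK HERE!",
        "text_moderation": [
            {"class_name": "Spam", "confidence": 0.98},
            {"class_name": "Profanity", "confidence": 0.15},
        ],
    }
}

print(sample["ocr_results"]["detected_text"])
print(flagged_text_classes(sample))
```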

Brand safety extends beyond just avoiding nudity and violence. Your brand's reputation can be damaged by association with a wide range of sensitive or controversial subjects. Our API's object and scene detection capabilities allow you to create a "digital immune system" that protects your brand from undesirable content adjacencies.

Our models are trained to identify a vast library of objects and concepts that can pose a risk to brand image, such as:

Managing images with people requires a delicate balance between user expression, privacy rights, and policy enforcement. Our API provides a suite of face-related tools to help you navigate this complex landscape. The core function is highly accurate face detection, which returns the precise coordinates (bounding boxes) for every human face in an image.

This core capability enables several critical applications:
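To show how the bounding boxes might be consumed, for example before cropping or blurring a region, the sketch below converts each box into a left/top/width/height rectangle. It assumes the four numbers are `[x_min, y_min, x_max, y_max]` in pixels, which this document does not confirm; check the API documentation before relying on that order. The `face_regions` helper is hypothetical.

```python
def face_regions(response: dict) -> list:
    """Convert each face bounding box into a left/top/width/height rectangle.

    Assumption: boxes are [x_min, y_min, x_max, y_max] in pixels.
    """
    faces = (response.get("face_detection") or {}).get("faces", [])
    regions = []
    for face in faces:
        x_min, y_min, x_max, y_max = face["bounding_box"]
        regions.append(
            {
                "left": x_min,
                "top": y_min,
                "width": x_max - x_min,
                "height": y_max - y_min,
            }
        )
    return regions


# Trimmed copy of the sample response
sample = {
    "face_detection": {
        "face_count": 2,
        "faces": [
            {"bounding_box": [112, 45, 201, 138]},
            {"bounding_box": [350, 88, 430, 172]},
        ],
    }
}

for region in face_regions(sample):
    print(region)
```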

The rise of powerful generative AI models like DALL-E, Midjourney, and Stable Diffusion presents a new and evolving threat to digital trust. Distinguishing between real and synthetic media is now essential for combating misinformation, fake profiles, and non-consensual imagery. Our API is at the forefront of this challenge, offering a specialized model designed to detect AI-generated images.

Our deepfake detection model doesn't rely on simple watermarks. Instead, it analyzes images at a pixel and frequency level to identify the subtle artifacts, statistical inconsistencies, and hidden patterns that are hallmarks of the generative process. The API provides a clear confidence score, indicating the likelihood that an image is synthetic. Key benefits include:
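A confidence score is only useful once it is compared against a cutoff. The sketch below reads the `generative_ai_detection` object from the sample response in this document and treats `confidence` as the likelihood that the image is synthetic; the `is_likely_synthetic` helper and the 0.9 default threshold are hypothetical.

```python
def is_likely_synthetic(response: dict, threshold: float = 0.9) -> bool:
    """Return True when the image is flagged as AI-generated with enough confidence."""
    result = response.get("generative_ai_detection") or {}
    flagged = bool(result.get("is_ai_generated"))
    return flagged and result.get("confidence", 0.0) >= threshold


# Trimmed copy of the sample response
sample = {
    "generative_ai_detection": {
        "is_ai_generated": True,
        "confidence": 0.95,
        "possible_source": "Midjourney",
    }
}

print(is_likely_synthetic(sample))
```

A lower threshold catches more synthetic images at the cost of more false positives, so a borderline band is often better routed to human review than blocked outright.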

For the full list of our API endpoints and their specifications, see the API documentation.

Sample response:

    {
      "status": "success",
      "request_id": "8a3fde9b-3a85-4c67-af41-2e6844358a9e",
      "moderation_classes": [
        {
          "class_name": "Suggestive / Racy",
          "confidence": 0.965
        },
        {
          "class_name": "Explicit Nudity",
          "confidence": 0.012
        },
        {
          "class_name": "Weapons",
          "confidence": 0.891
        },
        {
          "class_name": "Violence",
          "confidence": 0.045
        },
        {
          "class_name": "Hate Symbols",
          "confidence": 0.003
        }
      ],
      "ocr_results": {
        "detected_text": "GET RICH QUICK! CLICK HERE!",
        "text_moderation": [
          {
            "class_name": "Spam",
            "confidence": 0.98
          },
          {
            "class_name": "Profanity",
            "confidence": 0.15
          }
        ]
      },
      "face_detection": {
        "face_count": 2,
        "faces": [
          {
            "bounding_box": [112, 45, 201, 138],
            "celebrity_prediction": {
              "name": "Not a celebrity",
              "confidence": 0.99
            }
          },
          {
            "bounding_box": [350, 88, 430, 172],
            "celebrity_prediction": {
              "name": "Jane Doe",
              "confidence": 0.92
            }
          }
        ]
      },
      "generative_ai_detection": {
        "is_ai_generated": true,
        "confidence": 0.95,
        "possible_source": "Midjourney"
      },
      "credits_remaining": 9875
    }

Sample request (Python):

    import requests
    import json

    # Your API key and the URL of the image you want to moderate
    api_key = "YOUR_API_KEY"
    image_url = "https://example.com/images/user_uploaded_image.jpg"

    # The API endpoint
    moderation_api_url = "https://api.imagemoderationapi.com/v1/moderate"

    # Authenticate with a bearer token and send the payload as JSON
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json"
    }

    # Choose which moderation models to run on the image
    payload = {
        "image_url": image_url,
        "models": ["nsfw", "ocr", "weapons", "faces", "deepfake"]
    }

    try:
        # The timeout keeps the call from hanging if the server does not respond
        response = requests.post(moderation_api_url, headers=headers, json=payload, timeout=30)
        response.raise_for_status()  # Raise an exception for bad status codes (4xx or 5xx)

        # Print the JSON response
        moderation_results = response.json()
        print(json.dumps(moderation_results, indent=2))
    except requests.exceptions.RequestException as e:
        print(f"An error occurred: {e}")

See how our AI-powered Image Moderation API provides critical solutions for businesses across diverse sectors, ensuring safety, compliance, and brand integrity.

Social networks live and die by the quality of their user-generated content (UGC). With billions of images uploaded daily, manual moderation alone cannot keep up. Our API provides the first and most critical line of defense, automatically scanning every profile picture, post, and story in real time. It instantly flags or removes content violating community standards, such as nudity, graphic violence, hate speech embedded in memes, and self-harm imagery. This automation drastically reduces the burden on human moderators, allowing them to focus on nuanced cases, while creating a safer and more positive environment that encourages user engagement and retention.

Trust is paramount in e-commerce. A marketplace filled with prohibited items, counterfeit goods, or inappropriate product images quickly loses credibility. Our Image Moderation API helps platforms like eBay or Etsy maintain a clean and trustworthy shopping environment. It automatically scans user-uploaded listings to detect prohibited items (weapons, drugs), nudity (inappropriate apparel photos), and even text in images that violates policies (e.g., directing users off-platform). By ensuring listing quality and compliance at scale, our API protects buyers, legitimizes sellers, and ultimately secures the platform's revenue stream.

Safety and authenticity are critical for user trust on dating apps. Our API helps platforms like Tinder or Bumble enforce content policies from the moment a user signs up. It scans profile pictures to block explicit nudity, gore, and images containing hate symbols or firearms. Furthermore, it can be integrated into private messaging systems to detect and block the transmission of unsolicited explicit images in real time, a major pain point for users. By fostering a respectful and secure environment, dating apps can improve user experience, increase match quality, and build a reputation for safety.

In the immersive worlds of online gaming and the metaverse, user expression is key, but it must be balanced with safety. Our API is essential for moderating visual assets created and shared by players. This includes custom avatars, user-designed emblems, guild logos, and in-game screenshots. The API can automatically reject assets that contain nudity, offensive symbols, or hate speech. This prevents toxic behavior, protects younger players from exposure to inappropriate content, and ensures the game world remains aligned with the developer's vision and age rating (e.g., ESRB, PEGI).

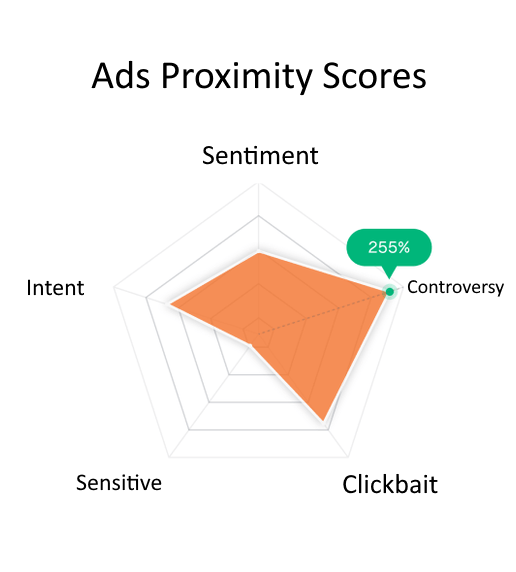

Brand safety is the lifeblood of the digital advertising ecosystem. Advertisers need assurance that their brands won't appear alongside harmful or inappropriate content, and publishers need to ensure the ads they display are compliant and safe for their audience. Our API serves both sides. It scans ad creatives before they go live to detect policy violations like nudity, weapons, or deceptive text (via OCR). It also analyzes publisher content where ads will be placed, flagging unsafe images to prevent negative brand association. This automated vetting process protects ad spend, increases advertiser confidence, and preserves publisher integrity.

Cloud storage providers have a legal and ethical responsibility to prevent their platforms from being used to store and distribute illegal material, most notably Child Sexual Abuse Material (CSAM). Our API provides a powerful, automated tool to help meet this obligation. It can be configured to scan all uploaded images against specialized models trained to detect CSAM and other illegal content with extremely high accuracy. This proactive scanning helps platforms identify and report illegal activity to authorities like NCMEC, mitigating legal risk and demonstrating a firm commitment to corporate responsibility.

From niche hobbyist forums to large community platforms like Reddit or Discord, moderating image-based submissions is crucial for maintaining a healthy environment. Trolls and bad actors often use shocking or offensive images to derail conversations and harass members. Our API acts as a vigilant gatekeeper, automatically analyzing every image posted to a forum. It can place potentially sensitive content (e.g., suggestive, minor gore) behind a spoiler tag or send it for human review, while instantly removing content that clearly violates the rules (e.g., pornography, hate symbols). This empowers community moderators and keeps discussions on topic and safe for all members.
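The tiered handling described above (remove clear violations, hide borderline content behind a spoiler tag, publish the rest) can be sketched as a small routing function. The class names are taken from the sample response in this document; which classes belong to which tier, the single 0.8 threshold, and the `route_image` helper are all hypothetical choices a forum would set for itself.

```python
# Hypothetical tier assignment; a real forum would define its own.
REMOVE_CLASSES = {"Explicit Nudity", "Hate Symbols"}
SPOILER_CLASSES = {"Suggestive / Racy", "Violence"}


def route_image(response: dict, threshold: float = 0.8) -> str:
    """Return "remove", "spoiler", or "publish", preferring the most severe tier."""
    flagged = {
        item["class_name"]
        for item in response.get("moderation_classes", [])
        if item["confidence"] >= threshold
    }
    if flagged & REMOVE_CLASSES:
        return "remove"
    if flagged & SPOILER_CLASSES:
        return "spoiler"
    return "publish"


# Trimmed copy of the sample response
sample = {
    "moderation_classes": [
        {"class_name": "Suggestive / Racy", "confidence": 0.965},
        {"class_name": "Explicit Nudity", "confidence": 0.012},
        {"class_name": "Hate Symbols", "confidence": 0.003},
    ]
}

print(route_image(sample))
```

Checking the removal tier first ensures that an image flagged in both tiers is removed, not merely hidden.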

Creating a safe digital classroom is non-negotiable. EdTech platforms used by K-12 students must be free of any inappropriate content. Our Image Moderation API is a critical safety layer for these environments. It can scan student avatars, project submissions, and any images shared on school-sanctioned forums or messaging boards. The system automatically blocks any content featuring nudity, violence, drugs, or other subjects unsuitable for children. This provides peace of mind for parents and educators, ensures compliance with child safety regulations like CIPA, and allows students to learn and collaborate in a secure online space.

Financial services, crypto exchanges, and other regulated industries rely on Know Your Customer (KYC) processes to verify user identities. This often involves users uploading photos of their government-issued ID and a selfie. Our API enhances this process by performing quality and content checks. It can reject submissions that are not actual faces (e.g., photos of celebrities, cartoons), flag IDs where the photo is obscured, and use deepfake detection to identify fraudulent attempts to bypass liveness checks. This adds a layer of automated security, reduces manual review time, and strengthens the integrity of the identity verification workflow.
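As an illustration of these checks, the sketch below collects reasons to reject a selfie submission from a single moderation response. Field names (`face_detection`, `celebrity_prediction`, `generative_ai_detection`) and the `"Not a celebrity"` label follow the sample response in this document; the `selfie_issues` helper, the one-face rule, and both thresholds are assumptions, and a production KYC flow would involve additional checks beyond this.

```python
def selfie_issues(
    response: dict,
    celebrity_threshold: float = 0.8,
    synthetic_threshold: float = 0.8,
) -> list:
    """Return human-readable reasons to reject a selfie; an empty list means no issue found."""
    issues = []

    faces = (response.get("face_detection") or {}).get("faces", [])
    if len(faces) != 1:
        issues.append(f"expected exactly one face, found {len(faces)}")

    for face in faces:
        prediction = face.get("celebrity_prediction") or {}
        name = prediction.get("name")
        confident = prediction.get("confidence", 0.0) >= celebrity_threshold
        if name and name != "Not a celebrity" and confident:
            issues.append(f"face matches a known celebrity: {name}")

    ai = response.get("generative_ai_detection") or {}
    if ai.get("is_ai_generated") and ai.get("confidence", 0.0) >= synthetic_threshold:
        issues.append("image is likely AI-generated")

    return issues


# Trimmed copy of the sample response
sample = {
    "face_detection": {
        "face_count": 2,
        "faces": [
            {"celebrity_prediction": {"name": "Not a celebrity", "confidence": 0.99}},
            {"celebrity_prediction": {"name": "Jane Doe", "confidence": 0.92}},
        ],
    },
    "generative_ai_detection": {"is_ai_generated": True, "confidence": 0.95},
}

for issue in selfie_issues(sample):
    print(issue)
```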

In telemedicine, patients often upload images for diagnostic purposes, such as a photo of a skin rash or injury. While essential for care, this process carries the risk of users accidentally or intentionally uploading irrelevant and explicit content, which could create a hostile work environment for healthcare professionals. Our API can be used to pre-screen these images. It can be tuned to allow for clinical nudity (which it is trained to differentiate from pornographic content) while flagging and blocking any sexually explicit or otherwise inappropriate images that are clearly not medically relevant. This protects medical staff and ensures the platform is used for its intended clinical purpose.

Hear from leaders who use our API to protect their communities and brand.

Explore our API with a free demo. See the power of AI moderation for yourself. No credit card required.